
As noted elsewhere, I am a little-D democrat and a big-R Republican. How can I hold two such divergent views? Because I believe in the power of unfettered human imagination and, ultimately, in the wisdom of crowds over time.

By “over time,” I mean in the long term. Crowds in the short term can be fickle and stupid. Sometimes, they are subject to madness and stampedes that end up killing dozens or hundreds of people on their periphery—especially when they brush up against a stone wall or a blocked door. Sometimes, crowds turn into mobs wielding crude weapons and venting their anger and hysteria on harmless people. But that is the short term. In the long term, tribes or societies or nations can reach an equilibrium of consensus, weigh possible choices, and find a path forward that works for most of their members.

Life in the United States—and in much of the developed world—today is materially and artistically richer because we have an open economy with free markets. This is in contrast with the command-and-control economies that grew out of Marxist philosophy and socialist principles, as echoed in current progressive thought. In this alternate view, the government, or the party in power, or some other overarching group of technical specialists and social scientists holds a vision of what society should be like, what its members should need and want, and then works to provide those necessities.1

There have been notable cases of relatively small groups of experts achieving great technological innovations: Thomas A. Edison’s original Menlo Park laboratory, AT&T’s Bell Labs, the Manhattan Project, and the Palo Alto Research Center. All were places where engineers and scientists with an interest in a particular specialty (electricity and practical invention, telephony and radio communications, atomic physics and energy, or computer applications) gathered to play in the field of science. Whether they were looking for a certain result, such as the first fission bomb, or just seeing what new approaches could bring to a novel technology, they all achieved great things. But these were private groups, except for the Manhattan Project, and while the work that they did was proprietary, their goal was to bring forth inventions that would be useful to society as a whole (yes, even the atomic bomb).

But except for the Manhattan Project, none of these groups claimed sole authority to delve into their particular specialty, to produce inventions that could not be challenged or surpassed by others in society, or to be the only voices heard in that specialty. Even the Defense Advanced Research Projects Agency, a function of the U.S. government, built the original internet so that scientists and engineers could share, comment on, and build on technological advances. Out of that first backbone network has grown the “enterprise of science” that now girdles the developed and undeveloped world, sharing knowledge and driving a technological revolution in physics, chemistry, biology, medicine, and every other science, along with literature, law, social science, and commerce. That one technology has put us all on an express elevator to the future.

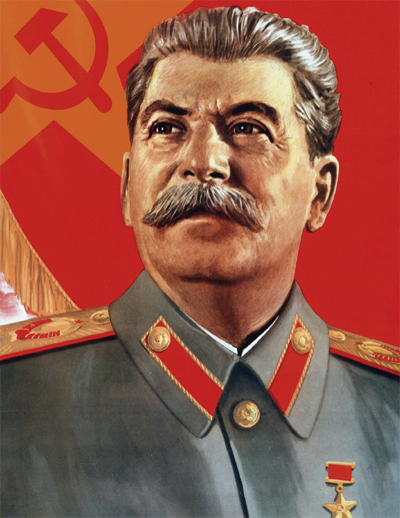

Contrast this with the sort of closed system promoted by the Soviets and the socialist Chinese, of the kind described in Aleksandr Solzhenitsyn’s In the First Circle. Scientists, engineers, and technical support people work there in closed facilities (sometimes structured as prisons for useful but otherwise untrustworthy minds) at the command of the government or party, producing only the results that the government can tolerate. It’s an extension of the gulag, even when the work is not done behind locked doors.

In a command-and-control economy, production—and, more importantly, innovation—are determined by a preselected group of specialists, technical experts, and all too often party functionaries. How much steel or aluminum will be made and shipped to fit a five-year plan written four years ago? How many shoes will be made and shipped to a city with a population of a million and a half people, whose feet fall into a statistical average of so many men and women, adults and children? How much bread does the average person need to eat—perhaps just to survive—and so factor into the annual planting for wheat production? All of these questions are left to people who decide for the nation what the nation needs and wants. A small plot of land might be left over for individual farmers to grow their own vegetables or to feed their own chickens—but nobody is invited to go into the produce or poultry business. And no one can invent a new way of making steel and set up a business to revolutionize production.

In an open market, cozy and predictable businesses like steelmaking are upset all the time. During the 19th century, the Bessemer process for turning pig iron into steel used a blast of air blown through the molten iron to burn off impurities. This process built the mills of the Ohio Valley. Then, after World War II, a Swiss engineer developed the Basic Oxygen Process, also known as the Linz-Donawitz process, after the Austrian towns where it was first adopted. LD steelmaking lowered a lance into the vessel and blew pure oxygen onto the molten iron to burn off the impurities. It made better steel and took over the market, and the Ohio Valley started to die.2

A command-and-control economy is basically conservative. The bureaucrats who run the five-year plan, and make investments in plant and people to meet it, naturally want to protect those assets. They have neither the interest nor the time to sample public opinion, keep on top of new inventions, and risk upending their industrial base to bring cost savings and technical improvements to their economy. An open market with a capitalist base is more adventurous. People with money to invest in the hope of multiplying it (yeah, basic greed and self-interest) don’t care what tidy national apple carts they might upset. They are attuned to public attitudes and pounce on new trends in both the public appetite and the technology that might serve it. They are investing their own money, or that of others they can convince to invest with them, and if they make a mistake and lose … well, it’s only money. Failure is a necessary part of growth, because no one has an absolutely clear crystal ball. And the damage is limited when the bets are made by small entrepreneurs instead of national governments.

If the computing and telecommunications technology of the past half century had been in the hands of a U.S. cabinet department or national institute—or even with Bell Labs—we never would have had the personal computer (two guys at Apple plus two guys at Microsoft), the personal music system (Sony and Apple again), or their synthesis in the smartphone (Apple and a lot of copycats). Such personal use of technology would not have been considered nationally important, and so these products worth billions if not trillions in today’s economy would never have seen the light of day. I would still be writing this article on a typewriter—with no place to publish it. You would still be making telephone calls from an instrument with a dial and a handset—and connected to the world with a wire.

Is the process of capital investment and free market distribution messy? Yes. Is it sometimes wasteful? Of course, both for the products it brings forth that fail and for the stable industries it disrupts and destroys. Personally, I never saw the attraction of either the Pet Rock or Pop Rocks—those hard candies that fizzle in your mouth. They were short-lived innovations, actually joke products, that someone thought would make a buck. A serious Soviet economist would have dismissed them out of hand. But still, some people made a living, however briefly, in selecting smooth river stones, washing and packaging them, and selling them as a novelty item. Some people made a living, however small, by mixing sugar, flavoring, and whatever made the candy fizz, packaging and selling it, and raking in the bucks. These people got a living wage, fed their families, maybe bought a house, paid taxes, and supported the rest of us in the economy. Who is to say that this is a worse choice than investing in a twenty-fourth brand of deodorant or a steel plant that is only marginally competitive with the imported Chinese product?

The point is, a free market supported by capital investment leaves the decision-making up to the wisdom of the rest of us, rather than to some embedded expert in a command-and-control economy. If a product or innovation is useful, it will find a use, establish itself, and perhaps change lives, not because some expert knows this ahead of time, but because the wisdom of individuals acting in a group demonstrates that it is so. The expert might have a hunch or an idea or a subtle aversion, but it is patently unfair (not to say unproductive) to give this individual or preselected group the final say in how the economy will operate. Only the market, messy and fickle as it is, can establish long-term value and benefit for the rest of us.

Or so I believe.

1. This is echoed in Bernie Sanders’s comment during the 2016 election that the U.S. economy doesn’t need twenty-three brands of deodorant. His premise was that providing this level of choice to the average consumer is a waste of resources, and the money invested in developing, manufacturing, and distributing those extra products could be better spent by society—meaning, according to the plans and programs he and his party have in mind. I still ask, would he be happy using Secret—“Strong enough for a man but made for a woman”—or how about my brand, which has a sailing ship on the package? Those are both popular brands. Why shouldn’t everyone be happy with them?

2. In the story of steel, U.S. productive capacity helped the Allies win the war, and this country emerged on top of the global economy. But our steel mills were left standing with old technology: Bessemer process furnaces and finishing by casting the steel into ingots, heat-soaking them, and then rolling them out into slabs, sheets, beams, and wire coils. Meanwhile, the mills of Germany and Japan had been bombed flat during the war; so in their rebuilding during the 1950s they could start fresh with LD process mills and new finishing technologies like continuous casting of slabs and sheets from molten steel. The U.S. mills had to move quickly to scrap their old equipment and rebuild with the new processes—but not quickly enough. So the U.S. market was flooded with better, cheaper European and Asian product, and basic steelmaking declined in this country. You snooze, you lose.