
I finally got to watch the movie The Circle on Netflix. It’s a chilling vision of where immersion in social media might take our culture, and that makes it a cautionary tale. The vision of people living in full public exposure, where “secrets are lies,” where personal privacy is a condition of public shame, reminds me of Yevgeny Zamyatin’s dystopian novel We, which I’m sure The Circle’s original author, Dave Eggers, must have read.

The movie is a depiction of peer pressure brought to perfection. By binding all of connected humanity into one hyperactive zeitgeist, this fictional social media software creates a howling mob. But does my analysis go too far?

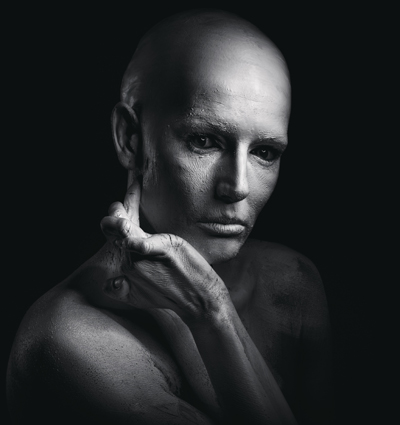

I am reminded of the nature of the culture we’ve created in America. We are cooperative as much as we are competitive, especially among the younger generation brought up with the teachings of Howard Zinn and the new “woke” enlightenment. When socialism finally comes to this country, it won’t be with the armbands and jackboots of Nazi Germany, nor with the grim-faced commissars of Soviet Russia. Instead, we will coerce people with the smiling positivism of the Romper Room lady: “Be a Do-Bee, not a Don’t-Bee!”

Smiling, positive peer pressure, supported by the veiled coercion of local laws and proprietary rulemaking, has accomplished a lot in my lifetime.

For example, when I was growing up almost everyone smoked,1 and they smoked almost everywhere. My parents were both pack-a-day Camel smokers. I started smoking a pipe—never cigarettes—during my freshman year in college, and this was three years after the Surgeon General’s report in 1964 on smoking and health. We lit up in restaurants, in cars, in every public space, and in every room in the house. The ashtray was a familiar artifact in every home and office.

To counter this, there was no national ban on cigarettes, no repeat of the Volstead Act enforcing the prohibition on alcohol. Instead, we got warnings citing the Surgeon General printed on every package of cigarettes. We got increased excise taxes on every pack, which made smoking prohibitively expensive for many people but especially the young. We got laws making the sale of cigarettes to minors illegal, in an attempt to stop them from ever taking up the habit. And the age limit in California was recently raised from 18 to 21 years old, comparable to limits on alcohol sales.

To change public habits and perceptions, we started by introducing both smoking and non-smoking sections, at first in restaurants. This was followed—at least in California, but soon after almost everywhere—with complete bans on smoking in restaurants, bars, and other public spaces, including indoor workplaces. As of 2008, you cannot smoke in a car with a minor present, under penalty of a $100 fine. As of 2012, California landlords and condominium associations can ban smoking inside apartments and in common areas. And smokers who go outside a public building to practice their vice must stay at least 20 feet from an entrance doorway, an open window, or even a window that can be opened.

This flurry of taxes, laws, and private rulemaking was accompanied by advertising campaigns that showed the damage smoking did to people’s lungs, hearts, and skin. It was supported by statistics about the occurrence of cancer and other life-threatening diseases, not just for those who smoked but for anyone nearby. Smoking became not just an unattractive, dirty, and smelly habit; it became a form of assault on the health and safety of others. In 1998, the attorneys general of 46 states sued the country’s four largest tobacco companies over tobacco-related health care costs that their states paid under Medicaid; they reached a settlement under which the companies curtailed their advertising efforts and agreed to pay perpetual annual amounts to cover health care and to help fund the efforts of anti-smoking advocacy groups.

Sure, you can still buy cigarettes in this country—if you have the money, and a place to enjoy them, out of sight of every other living person.

In my lifetime, I have seen a parallel revolution in the handling of garbage. It used to be that everything you didn’t want went one of two places. Your food wastes went into the garbage disposer in the kitchen sink, to be ground to pulp and flushed into the sewer lines or the septic tank.2 Everything else went into a paper grocery bag (later a plastic grocery bag) in the can under the kitchen sink, to be emptied into the bigger galvanized cans in the garage, taken to the curb once a week, picked up by the municipal garbage truck, and hauled away to some distant place out of sight and out of mind. Where the hauling ended up was a matter of local practice. Some communities dumped their “municipal solid waste” in landfills. New York City put its waste on barges, hauled it out to sea, and dumped it into the abyss of the Hudson Canyon.

Municipal solid waste was anything you didn’t want or no longer needed. It included food scraps you couldn’t be bothered to feed into the garbage disposer; waste paper, newspapers, wrappers, and corrugated boxes; soda cans and any pop bottles some kid hadn’t already taken back to the store for the two-cent redemption; broken furniture and appliances; old paint and construction debris; and dead batteries, worn-out car parts, and used motor oil and filters. If you were through with it, it went out to the curb.

And then the recycling movement started, prompted by environmental concerns about landfills and pollution.3 At first, we saved our wastepaper to be recycled into newsprint and cardboard boxes, in an effort to save the trees.4 Then we were recycling those aluminum cans, any glass and plastic bottles that didn’t have restrictive redemption value (like California’s five and ten cents on plastic soda bottles, based on size), and other distinguishable and potentially valuable materials. Originally, each of these commodities had to go into its own bin. But now the recyclers take paper, metal, and various grades of plastic in the same bin, because they will shred it all and separate the bits with magnets and air columns.

In the greater San Francisco area, we began seeing metal tags on storm grates reading “No Dumping – Drains to Bay,” usually with a decorative outline of a fish, to keep people from putting motor oil, battery acid, and other pollutants into the water supply. And it’s illegal now to dump these things—along with dead batteries, discarded electronics, and broken furniture—anywhere but at certified recycling centers. More recently, in 2016, California began requiring businesses and housing complexes to separate and recycle their organics—the things we used to put into the garbage disposer—to be hauled away for composting.

So now, instead of dumping everything down the sink or into the garbage can, as we did when I was a child, we rinse out our food and beverage cans and bottles and keep them in the closet until the recycle truck comes. And we put our coffee grounds, vegetable peelings, and table scraps in a basket—my wife used to keep them in the freezer, to cut down on odors and insects, a practice I continue—and hold them until the composting truck comes. We hand-pick, sort, and pack our garbage to go off to the appropriate disposal centers.

All of this change was accomplished not just by laws and fines but more directly with a change in social norms, similar to the anti-smoking campaigns, intended to make those who failed to sort and wash their garbage not just careless but failing in their social duties—a Don’t-Bee—subject to public ridicule. Of course, the movement has not been without its downsides. People who can’t be bothered to take their garbage to the appropriate recycling centers now dump it at midnight on any vacant lot or in someone else’s yard. And alert criminals, who know what the recycling centers will pay for valuable commodities like refined copper, are stripping the wiring out of public parks and farmers’ pumping stations, also at midnight.

Social engineering through a mixture of taxation, regulation, and peer pressure is now an accepted tool in this country. Smoking and garbage sorting are subject to popular taboos. Similar peer pressure—if we let it happen—will soon govern what, where, and how we can drive; where and how we can live; what we can eat; and how often we must exercise. Social media, with its built-in opportunities for social preening and its chorus of social scolds, will only accelerate the process of making us all Romper Room children.

China, which once sought to ban the internet, has now co-opted it—along with aggressive DNA profiling and new technologies like artificially intelligent facial-recognition software—to institute a Social Credit System as a means of public surveillance and control. In their plans, the system by 2020 will index, among 1.4 billion people, each citizen’s social reputation and standing based on personal contacts made, websites visited, opinions published, infractions incurred, and other individual performance variables. People with high ratings and demonstrated social compliance will be rewarded with access to good jobs, financial credit, travel priorities, and favorable living conditions. People with low ratings and recorded infractions will be massively inconvenienced and their futures curtailed.

I’d like to say it can’t happen here, but I don’t know anymore. I can say that, although I try to live a good and proper life, obey the rules, pay my taxes, and not hurt anybody’s feelings, this kind of surveillance and control is out of bounds in a free society. If social media turns into the kind of howling mob predicted by The Circle, then I am out of here. I will take my socially unapproved weapons and move to some socially unacceptable place to join the next social revolution. I will become the ultimate Don’t-Bee.

1. Except for my late wife. I met her just after I had quit smoking, for the last time, and a lucky thing that I did. Her expressed view was “kissing a smoker is like licking a dirty ashtray.”

2. My mother, having trained as a landscape architect, set aside a place at the far edge of the back yard for her compost pile. (Now that I think of it, her mother kept a place like that, too.) Here we carried out and dumped our grass clippings, coffee grounds, vegetable peelings, egg shells, and anything else organic that would decompose into a nice, rich mulch for the garden. But not the raked leaves: those we piled against the curb and ceremoniously burned as an homage to autumn—except you can’t do that anymore because of air pollution laws.

3. I almost became part of this movement professionally. Back in the 1970s, when I was between publishing and technical writing jobs, I answered an ad from a man who had a system for “mining” municipal solid waste. He had studies showing that garbage was the richest ore on Earth, with recoverable percentages of iron and nickel, copper and brass, aluminum, and glass that exceeded the per-ton extraction at most modern mines. After separating these valuable commodities, he proposed pelletizing the paper, organic solids, greases, and oils into a fuel that could be burned at high temperature to generate electricity. Garbage was, in his view, literally a gold mine. The only hitch was that, instead of hauling dirt out of an open pit to a nearby processing plant or smelter, this new kind of ore had to be collected in relatively small amounts by sending a truck house to house. It wasn’t the value of the thing itself that was in question but the small quantities and the logistics involved.

4. Of course, no one harvests old-growth redwoods and pine forests to make paper, not even high-quality book papers and artist’s vellum. As a young child on family boating trips to the upper reaches of the Hudson River, I saw pulp barges coming down to the paper mills around Albany from pulpwood farms in Quebec. Our paper is grown on plantations as a renewable resource, like Christmas trees. The mantra about “saving a tree” is akin to abstaining from eating corn in order to save a cornstalk. We farm these things.