A slope and a curve rise at rates with different mathematical properties. A steady slope is generated arithmetically, by adding the same single unit at each step (1, 2, 3, 4 …), while a curve is generated by multiplying, either exponentially by repeated doubling (2, 4, 8, 16 …) or by squaring or cubing the units (1, 4, 9, 16 …). A parabolic curve, at least the part that proceeds upward from its low point, is generated by the formula ax² + bx + c,¹ and it can rise really fast. My contention here is that our technological advancement since about the 17th century has been on a parabolic curve rather than a slope.
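
To make the difference between a slope and a curve concrete, here is a minimal Python sketch that tabulates the three kinds of growth side by side. The function names and the default coefficients (a = 1, b = 0, c = 0) are illustrative assumptions, chosen only to keep the numbers easy to read.

```python
def linear(n):
    """Arithmetic growth: add one unit per step (1, 2, 3, 4 ...)."""
    return n


def quadratic(n, a=1, b=0, c=0):
    """Parabolic growth from the formula a*n^2 + b*n + c.

    The default coefficients are assumptions made for illustration.
    """
    return a * n**2 + b * n + c


def doubling(n):
    """Exponential growth by repeated doubling (2, 4, 8, 16 ...)."""
    return 2**n


def growth_table(steps):
    """Return (step, slope, parabola, doubling) rows for steps 1..steps."""
    return [
        (n, linear(n), quadratic(n), doubling(n))
        for n in range(1, steps + 1)
    ]


if __name__ == "__main__":
    print(f"{'step':>4} {'slope':>6} {'parabola':>9} {'doubling':>9}")
    for step, slope, parabola, doubled in growth_table(10):
        print(f"{step:>4} {slope:>6} {parabola:>9} {doubled:>9}")
```

By step 10 the slope has reached only 10, the parabola 100, and the doubling sequence 1,024. Either curve leaves the slope far behind, and the gap widens with every step.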

In ancient times—think of Greece and Rome from about the 8th century BC, the first Olympiad for the Greeks, or the mythical founding of their city for the Romans—there was technological advancement, but not even a slope. More of a snail’s pace. The Greeks had their mathematicians and natural and political philosophers, like Pythagoras and Aristotle, but aside from writing down complex formulas and important books which probably only a fraction of the populace bothered to read, their works did not materially improve everyday life. The Greeks never united their peninsula politically, for all their concept of democracy, remaining stuck at the tribal and city-state level of conflict. And from one century to the next, they drank from the local wells, shat in the nearby latrines, and traveled roads that washed out every year with the spring floods. They built in marble the temples of their gods, but otherwise the average people lived in houses of wood and mud brick not much different from those of their predecessors in Homeric times, five centuries earlier.

The Romans did somewhat better, being short of actual philosophers but abounding in practical engineers. They developed a democratically based political and military system that united their peninsula and went on to conquer most of their known world. They built huge aqueducts to bring fresh water into their cities from distant springs, underground sewers to take away human wastes, and roads dug many layers deep into the ground that could reliably move goods—and armies—from one end of the empire to the other. They built temples and palaces in marble laid over brick but also invented a synthetic stone, concrete, that their engineers originally made from a volcanic ash known as “pozzolana.” Common people in the city lived in apartment blocks called insulae, or “islands.” They bathed regularly and made a civic virtue of the practice. Life was better under the Romans, but technological advancement was still glacially slow.

Rome, at its fall in the Western Empire during the 5th century AD, was technologically not much different from the Rome of Julius Caesar, five centuries earlier. And that fall—due largely to climate change and the ensuing barbarian migrations—plunged Europe into a Dark Age that saw little advancement in any of the arts, although we did get some practical technologies like the wheeled plow and the stirrup. Those, along with gunpowder adopted from Chinese fireworks and movable type, pioneered in China and developed by Gutenberg in the 15th century for printing Bibles, carried us through to the 16th century, the time of the Tudor reign in England or the Medici in Italy.

After that, technologically speaking, all hell broke loose.

Some might credit René Descartes and his inventions of analytic geometry and the scientific method, based on observation and experiment; or Isaac Newton and his invention of the calculus (also developed independently by Gottfried Leibniz in Germany) and his studies of gravity and optics; or Galileo and his work in physics and astronomy. Intellectually, the 17th was a fruitful century.

But from an exhibit that my late wife prepared at The Bancroft Library years ago, I learned that a more immediate change came about with the exploration of distant lands, when the European trading companies set up to exploit them began importing coffee and tea into the home market in the 17th century. Before then, people didn’t drink much water because of rampant contamination; so instead they drank fermented beverages—sweet wines, small beer, and ale—because alcohol helped kill the germs, although they didn’t think about it in those terms. So they would sip, sip, sip all day long, starting at breakfast, until everyone was half-plotzed all the time. But then along came coffee and tea, which were good for you because you had to boil the water to make them. Everyone brightened up and began thinking. The denizens of Lloyd’s Coffee House in London invented insurance companies to protect the sea trade, which required estimates of risk and probability, and that led to a whole new branch of mathematics and the spirit of investment banking.

Put together scientific investigation with the widespread availability of printed books and the clear minds to read them, and we’ve been on that rapidly rising parabolic curve ever since.

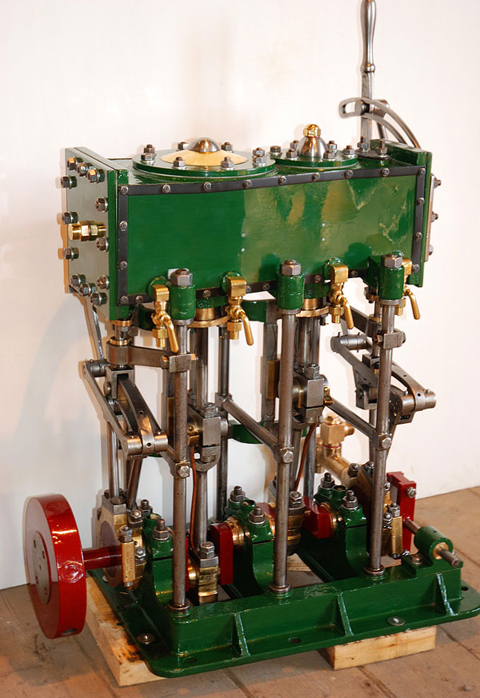

We are just over three hundred years from the first steam engine, patented in 1698 to draw water from flooded mines. In the time since then, the engine has gone from triple-expansion cylinders to turbine blades. And that is the least of our advances. This year, we are just two hundred years from the first primitive electric motor, built by Michael Faraday in 1821. And now we have motors both small and large driving everything from trains, elevators, and cars to vacuum cleaners and electric shavers.

In my lifetime, I have seen music go from analog grooves cut into vinyl disks and magnetic domains on paper tape to digital representations stored on a chip, and photography go from light-sensitive emulsions on film and paper to similar—but differently structured—digital sequences on chips. My electric typewriter—again driven by a small motor—has gone from impact-printing metal representations of the alphabet on a sheet of paper to storing different digital sequences on that same chip in my computer. All of this puts the stereo system, camera, and typewriter I lugged off to college fifty years ago into a single device that started out on my desktop, migrated to my laptop, then moved into my hand inside a smart phone, and now lives on my wrist instead of a watch. And the long-distance call I made every week from college to my parents at home was once a direct wire connection established by operators closing switches; it would now be a series of digitized packets sent out through the internet and assembled by computers at each end of the conversation. Gutenberg’s process for printing words on paper is now embodied in the photo-masking of electronic circuits on silicon chips. And we’re not done yet.

In 1943, the British codebreaking effort that included Alan Turing built Colossus, one of the first programmable electronic computers, to crack German ciphers for the Allies. In 2011—just 68 years later—that machine’s lineal descendant, IBM’s Watson, was playing, although not necessarily consistently dominating, Jeopardy!, the trivia game based on history, culture, geography, and sports and dependent on linguistic puzzles and grammatical inversion. While that was a stunt, similar “artificially intelligent” systems based on the Watson design are now being sold to businesses to analyze and streamline operations like maintenance cycles and supply chain deliveries. They will take the human element, with its vulnerability to inattention, imagination, and corruption, out of processes like contracting and medical diagnosis. Any job that involves routine manipulation of repetitive data by well-understood formulas is vulnerable to the AI revolution.²

Add in separate but related advances in materials, such as 3D printing—especially when they learn how to make metal-resin composites as strong as steel—and you get disruption in much of manufacturing, along with the global supply chain.³

Any theory of economic value that depends on human brawn—I’m looking at you, Marxists—or now even human brains is going to be defunct in another half century. That’s going to be bad news for countries that rely on huge populations of relatively unskilled hands to make the world’s goods, like China and India.

Intelligent computers are also able to do things that human beings either cannot do or do poorly and slowly. For example, in November 2020, Nature magazine reported on an AI that can predict and analyze the 3D shapes of proteins—that is, how they fold up from their original, DNA-coded amino acid sequences—almost as well as the best efforts of humans using x-ray crystallography. And this was just 20 years after the first sequencing of the human genome using supercomputers, and less than 70 years after x-ray crystallography gave the first glimpse of the DNA double helix. Knowing the structure and thereby the function of a protein from its DNA sequence is a big deal in the life sciences. It will take us far ahead in our understanding of the chemistry of life.

Ever since the 17th century, our technology has been riding a curve that gets steeper every year. And the progress is not going to slow down but only get faster, as every government, academic institution, and industrial leader invests more and more in what I call this “enterprise of science.” Anyone who reads the magazines Science and Nature can see the process at work every week.⁴ We all stand on the shoulders of giants. We stand on each other’s shoulders. We build and build our understanding with each advance and article.

This rate of increase might be slowed, marginally, by a global depression. We might be set back severely by a nuclear war, which might return our technological level, temporarily, to that of, say, the telegraph and the steam engine. But it will only be stopped, in my estimation, by an extinction event like an unavoidable asteroid or comet strike, and then so much of life on this planet would die out that we humans might not be in a position to care.

As to where the curve will lead … I don’t think even the best science philosophers or science fiction writers really know. Certainly, I don’t—and I’m supposed to write this stuff for a living. The next fifty years will take us in perhaps predictable directions, but after that the effects on human economics, culture, and society will create an exotic land that no Asimov, Bradbury, or Heinlein ever imagined. Fasten your seat belts, folks, it’s going to be a bumpy ride!

1. That’s a quadratic equation. And no, I don’t really understand the formula’s properties myself, having nearly flunked Algebra II.

2. But no, the computer won’t be a “little man in a silicon hat,” capable of straying far outside its structural programming to ape human intellect and emotions—much as I like to imagine with my ME stories. And it won’t be a global defense computer “deciding our fate in a microsecond” and declaring war on humanity.

3. It’s become a commonplace that the U.S. lost its steelmaking industry first to the Japanese, then to the Chinese, because they were more advanced, more efficient, and cheaper. Not quite. This country no longer makes the world’s supply of bulk steel for things like pipe, sheets, beams, and such. But so what? We are still the leader in specialty steels, formulations for a particular grade of hardness, tensile strength, rust resistance, or some other quality. Steelmaking in our hands has become exquisite chemistry rather than the bulk reduction of iron ore.

4. For example, just this morning I read the abstract of an article about adapting the ancient art of origami to create inflatable, self-supporting structures that could be used for disaster relief. I read and I skim these magazines every week. And frankly, some of the articles, even their titles, are so full of references to exotic particles, or proteins, or niches of mathematics and physics that I can only guess as to their subject matter, let alone understand their importance or relation to everyday life.