Just like the poor, the mad have always been with us. They only present differently in society at each stage because we keep evolving our labels, diagnoses, solutions, and attitudes for dealing with brains and personalities that do not function within the societal parameters set by the rest of us “normies.”1

This is one reason you don’t see references to “schizophrenia,” “bipolar disorder,” or other psychological conditions in ancient or even near-modern literature. The first concepts of psychology as a science really came about in the late 19th century with practitioners like neurologist Sigmund Freud in Vienna and pure scientists like Wilhelm Wundt in Leipzig. Terms, diagnoses, and approaches have been evolving ever since and are still being continually re-examined and refined today. The science, such as it is, moves back and forth between seeing psychological conditions as issues originating in the realm of ideas (e.g., bad thinking, childhood mistreatment, mistaken perceptions of reality, or spiritual or moral dysfunction) and in the realm of brain structure and biology (e.g., physical trauma, inherited or acquired disease, and maladjustment of the brain’s neurotransmitters). Or some of both, I would think.2

In ancient times, you seldom saw outright references to madness in the surviving literature. This is not because madness did not exist, but because people who were born with faulty neural wiring, or who became ill through mistreatment and cumulative trauma, either died young through starvation or misadventure, were kept as a shameful secret in a back room of the castle or by the hearth in the family hut, or found some niche in society where a loose grip on reality was no hindrance or even an actual boon. Such a person might become a shaman, an oracle like the priestesses at Delphi, a spiritual leader, or, if the person had a knack for jokes and storytelling, a king’s fool. Many positions in society benefit from a loose association with reality, an unusual or altered perspective, or the flights of fancy that others can interpret as being in touch with the gods, the future, or the forces that drive fate.

It has been suggested, for example, that Joan of Arc was an undiagnosed schizophrenic, and the religious orientation of her voices and delusions fit well with the needs of her king and her society during the Hundred Years’ War. And certain forms of autism and some personality disorders can give a religious figure, a politician or war leader, or any kind of craftsman the fixity of purpose, the literal interpretation of facts and intentions, and the firm resolve needed for success. Such people are not usually easygoing or pleasant to be around, but in the right situation they can get the job done.

There are also degrees and variations of psychological conditions. A person may be “high functioning,” meaning that he or she can get along in society and manage not to be ostracized or killed while still dealing with a diagnosable condition. This realization comes under the heading of “Some people are just odd.” And again, some personality conditions, in the right niche, can prosper where the rest of us with conventionally “normal” viewpoints would flounder or suffer from distraction or terminal boredom.

When societies, particularly in Western Europe, became more crowded, urbanized, “civilized,” and socially conscious, the “crazy” people, perhaps pushed out of their comfort zone by the stresses of urban living, could neither find an acceptable niche in society nor survive with their diminished wits and skills. Concerned citizens then wanted some decent provision made for their welfare, if not for their declining mental health. So medieval hospitals like Saint Mary Bethlehem in London were repurposed as refuges or asylums for the insane and the incapacitated, usually under dispensation or with support from the crown. They were an unhealthy cross between hospitals with no positive medical regimen and debtors’ prisons from which there was no escape. The inmates were left to sit in their current condition, sometimes in their own filth, muttering or raving to themselves, with minimal care. Or they were handed over to experts and reformers who attempted cures based on the latest moral or religious philosophies. “Bethlehem” became “Bedlam.”

The practicalities of warehousing the insane, out of sight of society and out of both its mind and the patient’s, developed along with science and medicine. Patients were eventually put in padded rooms and under restraint, and sometimes lobotomized, shocked, or otherwise physically altered, until the 1950s. Then the drug chlorpromazine, sold in the United States as Thorazine, arrived as an antipsychotic, an anxiolytic, and, well, actually, a powerful sedative. It is hard for a patient to “act out” and become a nuisance to the staff when his or her mind is in a deep fog. We have since developed other and better medications to treat psychosis, depression, mania, anxiety, and the other specific symptoms of recognized diseases. These drugs, often prescribed in “cocktails” of two or three at once, mostly act on the brain’s various neurotransmitters, the chemical messengers that carry signals across the synapses between nerve cells. All of these medications have some measurable side effects, although the newer versions are often less intrusive than the older ones.

With proper medication over the long term, and with sufficient “talk therapy” to explain the patient’s condition to his or her satisfaction, a moderately to severely ill person can now often become essentially “high functioning.”

From the late 1940s on, we also began to see a change in the popular culture regarding “insanity” or “madness.” Stories and movies like The Snake Pit, Harvey, and One Flew Over the Cuckoo’s Nest told of innocent, eccentric, and sometimes just careless or carefree people being railroaded into insane asylums, sometimes with the connivance of an uncaring judge or covetous relatives, and then fighting to get out. The fear of being incarcerated without cause or cure, like the Victorian fear of falling into a coma and being buried alive, drove changes in society.

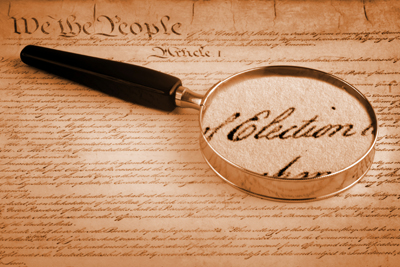

In California, the legislature passed the Lanterman-Petris-Short Act in 1967, which went into full effect in 1969; other states passed similar legislation at about the same time. The California act regulated involuntary civil commitment to a mental health institution. Basically, a person in any mental condition can only be held against his or her will for 72 hours (three days), and then only if he or she can be considered “a danger to self or others, or gravely disabled.”3 This is the premise of the state’s Welfare & Institutions Code (WIC) 5150. After 72 hours, the person is entitled to a hearing before a judge or court-appointed hearing officer, where the committing facility or county mental health representative must show through examination that the person is still dangerous or disabled; that may extend the commitment by up to 14 days (WIC 5250). After that, presumably with further treatment, another certification can extend the hold for an additional 14 days if the person is found to be imminently suicidal (WIC 5260). And then, if the patient does not agree to stay, he or she must promptly be released. This is why most court-ordered, mandatory treatments, such as those for alcohol or drug abuse, are only for 28 days; after that, the person must either be charged under criminal statutes or released.4

This and similar legislation has the effect of going into the mental hospitals and asking, “Who here does not think they’re ill and so should be released?” And since anosognosia, or lack of insight into one’s own condition, is a symptom of many serious mental illnesses, notably schizophrenia and bipolar disorder, the patients, whether they had been wrongfully committed or not, largely asked to be let go. In 1963, during the Kennedy Administration, Congress passed the Community Mental Health Act to provide local services for the mentally ill, allowing them to live in and become part of their local communities. But the act paid mainly for building community mental health centers rather than for staffing and running them over the long term, far fewer centers were built than planned, and the goal remained an optimistic ideal. In California, mental health services are funded and provided locally at the county level. Of course, it’s a lot more difficult, costly, and time-consuming to provide for people in the community on an individual, meet-and-greet, case-management basis than to assign them to a bed in a state hospital and care for them someplace far off in the hills. But that’s now the law.

Which means we are pretty much back to the pre-urban Middle Ages. Parents are caring for their severely disabled son or daughter, housing them in a back room, trying to get them the time-limited and inadequate care available under the county system or through private insurance, and eventually providing for their special needs with a modification in their wills that accommodates ongoing care and conservatorship after their own deaths without jeopardizing the disabled person’s access to public services. That, or the mentally disabled relative is turned loose by society to survive on the street as a homeless person, anesthetizing his or her mental condition with drugs and alcohol, scrounging in garbage cans for sustenance, and generally dying young.

But I don’t believe in end states. Every time, every system, every current condition is a point on a curve, in transition to either a better or a worse future state. And I believe that the thrust of science and technology—in this case, medical science, psychological understanding, advances in neurology and physiology, and pharmaceutical discoveries—is upward. That things will get better.

Our science will either improve the level of treatment available on an individual, personalized basis—perhaps with better medications, better understanding of disease processes, or therapies assisted by artificial intelligence—or we will develop more attractive, more socially acceptable, more comforting and caring treatment centers—perhaps on the basis of assisted living communities and therapeutic resorts. In either case, the under-funded, over-stressed, catch-as-catch-can approach of current community services cannot continue: the families caring for their wounded children will fail through exhaustion and revolt, and the society plagued with needles and feces in the streets and tent cities under its freeway overpasses will demand a better solution.5

One way or another, we must recognize, accept, and care for the crazy people among us, or slip back into a dark age.

1. That is, as they say in Alcoholics Anonymous and other affected circles, the “chronically [or habitually] normal people.”

2. The one thing that cannot make a person clinically insane is the sort of direct emotional and intellectual manipulation depicted in the 1938 play Gas Light and the films made from it, and now referred to as “gaslighting.” You can intimidate people, confuse them, make them weary and angry, and break down their social and emotional defenses, even cause them to lash out in fear and frustration, but you cannot “drive them [clinically] insane.”

Of course, through profound exposure to bad experiences such as a total reversal of a person’s life condition, a violent trauma, cumulative fatigue or injury, especially in wartime situations, and other severe dislocations, a person may suffer continuing emotional distress: post-traumatic stress disorder (PTSD). This is a real psychological condition with recognized symptoms and recommended treatments. It can happen to anyone. But still, the trauma has not driven the person “insane” in the old sense of the word: laughing maniacally, cackling inanely, and seeing phantasms.

3. And it has been decided judicially that a person who can only feed him- or herself by scrounging in a garbage can does not fit the definition of “gravely disabled.”

4. Two exceptions exist. At the end of the first 14-day hold, a person deemed to be gravely disabled due to mental illness (WIC 5270) may be held for an additional 30 days. And a person who has made a serious threat of substantial physical harm to another person (WIC 5300) may be held for an additional 180 days.

5. We are already seeing a backlash in the form of Laura’s Law, Assembly Bill 1421 from 2002 in California, inspired by a young woman killed by a man who had refused psychiatric treatment. The law allows for court-ordered Assisted Outpatient Treatment (AOT) for people with severe mental illness. It is modeled on Kendra’s Law, a 1999 New York State statute, and similar laws have been adopted in other states.