
This is my brain following a random path toward a real thought. So bear with me. What is a clown?

I mean really, what do the full image, aspect, and persona of a clown represent? Are clowns a distinct race of mythical beings? Certainly, they are always human beings dressed up in a particular style. But are clowns representations of imaginary figures, like the black-robed, hooded figure of the Grim Reaper with his scythe? (And why is Death always male?) Are they like the kachina spirits of the Hopi and other Pueblo peoples? Clown makeup and costumes are varied (in the professional circus, a performer’s face painting is even registered and protected), but the genus or type is always recognizable. If I mention a red bulb nose, orange string wig, and absurdly long shoes, you know that I’m speaking about some kind of clown.

The look and manner of the modern circus and rodeo clown go back to the Italian Commedia dell’arte of the 1500s. The theater companies of that era employed eight to ten stock characters, each with a distinctive face and costume, so that the audience would know what to expect. Not all of the characters were meant to be funny, but each had its place in familiar human situations that were always played for a laugh. And the servant characters (Pierrot, Harlequin, and Pulcinella) were generally the perpetrators of madness. That is, clowns.

Over time, and with the aid of the traveling circus in both Europe and North America, the clown itself has become a stock figure. Under the Big Top, clowns provide comic relief between the more daring and dangerous acts, like the lion tamers and acrobats. On the rodeo circuit, clowns rush into the action to distract a loose horse or bull and protect the riders. Clowns are now physical performers, usually without speaking parts. They are visually funny, while other comedians make jokes with their words and facial expressions.

And for some people, clowns are scary. Clowns are made up to be exotic and absurd. They pantomime humor but with a subtle edge of intent, sometimes of meanness, since any humor can be turned to ridicule and to hurt. To some sensibilities, the exaggerated lines and shapes drawn on an otherwise human face, which are the essence of a mask that hides the underlying identity, are disturbing. Perhaps the best representation of this feeling of dread is the evil smile of Pennywise the Clown (not really a clown or a human being at all) in Stephen King’s novel *It*.

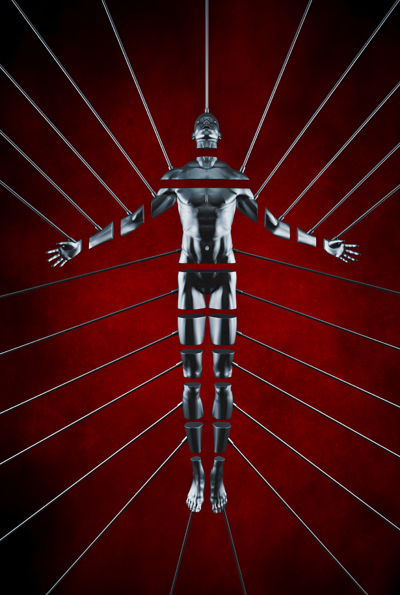

What makes us feel uneasy about clowns is also what makes us uneasy at a masquerade ball or a Halloween party. And, let’s face it, it is what makes us uneasy with the whole concept of actors and acting, and it helps explain why acting has been disrespected as a profession: praised but not generally trusted by polite society. We are accustomed in our daily lives to seeing a person’s face and believing we can tell what that person is thinking and feeling, who they are, and what they will do. We believe that the eyes are the “windows of the soul” and that we can read meaning there. We also believe we can trust the smiles and laughter of the people we meet. Acting hides this. Masks hide this. Masks not only conceal identity; they also remove humanity. And a clown’s heavy, exaggerated makeup is far more of a mask than the powder, eye shadow, and lipstick that many women put on in the morning.

And there, for many of us, is the difficulty when we encounter a transgender person, a transvestite or a drag queen,¹ or even a markedly effeminate man or masculine woman. Our sense that we can tell a person’s true being just by looking at him or her is skewed.

We generally take a person’s sex (male or female, pick one) to be an essential part of their character. We consider it the base, ground-level, first-order characteristic of a person’s makeup. Check this box first: man or woman? And a man made up and dressed to look like a woman, or who acts like and believes he is a woman, befuddles this sense. Even if he has undergone hormone treatments and surgeries so that in some physical dimensions he matches a female body type, we still feel that something is amiss; the same holds for a woman who has undergone similar changes to emulate a male body. The width of the hips, the ratio of body fat, or some subtle missing part of the whole presentation cues us that what we are looking at is not what we were led to expect.

When we see a clown, we know that we are not looking at a separate species of being, but at a human person who has put on greasepaint, a string wig, and floppy shoes. We accept the change as striving for a particular type of presentation. But when we see a man sculpted and painted like a woman, or a woman pared and groomed like a man, the presentation strikes deeper into our awareness.

It doesn’t just confuse and disturb us. It makes us feel that our sense of basic human nature has been betrayed. It makes us feel threatened.

1. And aren’t drag queens sometimes played for comic effect? Certainly, their heavy makeup and exaggerated characteristics are often meant to get a laugh.